
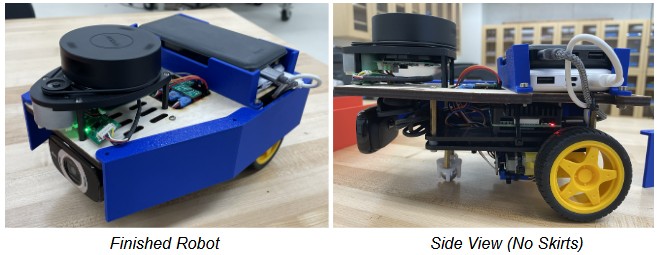

Implemented custom ROS2 nodes for motor control, odometry, and sensor fusion on a Raspberry Pi, achieving 5 cm navigation accuracy via RRT path planning and lidar scan-matching, which reduced drift by more than 80%. Designed a state machine for dynamic task prioritization that processes real-time scan data and environmental inputs to optimize robot behavior.
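For readers unfamiliar with RRT, here is a minimal 2D sketch of the general technique. It is not the project's ROS2 node: the circular obstacle model, the parameter names (`step`, `goal_tol`, `goal_bias`), and the endpoint-only collision check are all assumptions made for illustration.

```python
import math
import random


def rrt(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5,
        goal_bias=0.1, max_iters=5000, rng=None):
    """Grow a tree from start until a node lands within goal_tol of goal.

    obstacles: list of (cx, cy, radius) circles (hypothetical obstacle model).
    bounds: (xmin, xmax, ymin, ymax) sampling region.
    Returns a list of (x, y) waypoints from start, or None if no path is found.
    """
    rng = rng or random.Random()
    nodes = [start]
    parent = {0: None}

    def collides(p):
        # Simplification: only the new node is checked, not the whole segment.
        return any(math.hypot(p[0] - cx, p[1] - cy) <= r
                   for cx, cy, r in obstacles)

    for _ in range(max_iters):
        # Occasionally sample the goal itself to pull the tree toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(bounds[0], bounds[1]),
                      rng.uniform(bounds[2], bounds[3]))

        # Nearest existing node (linear scan; fine for a small tree).
        i_near = min(range(len(nodes)),
                     key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue

        # Steer from the nearest node toward the sample by at most `step`.
        t = min(step, d) / d
        new = (near[0] + t * (sample[0] - near[0]),
               near[1] + t * (sample[1] - near[1]))
        if collides(new):
            continue

        nodes.append(new)
        parent[len(nodes) - 1] = i_near

        if math.dist(new, goal) <= goal_tol:
            # Walk parent links back to the root to recover the path.
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None
```

A call such as `rrt((1.0, 1.0), (9.0, 9.0), [(5.0, 5.0, 1.5)], (0.0, 10.0, 0.0, 10.0))` returns a waypoint list that skirts the obstacle. A real planner would also check each segment against the map and smooth the result.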

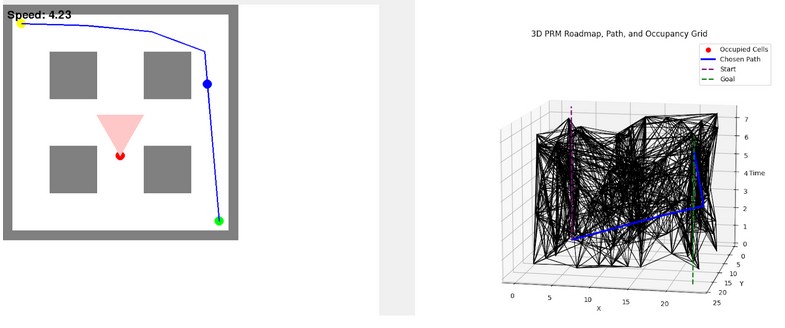

Developed a 2D autonomous agent in Python that captures a flag and returns while avoiding collisions in a dynamic environment with static and predicted dynamic obstacles. Implemented three motion planners: 2D RRT, 3D RRT with time coordination, and PRM with A* and a nonlinear cost function penalizing proximity to enemy FOV (cone-based, angle + range buffered). Built a dual-visualization GUI (Tkinter + Matplotlib + Pygame) for real-time path-following and occupancy grid monitoring, supporting iterative debugging and planner validation. Used Shapely, NumPy, and custom enemy motion predictors to model path safety margins and enforce time-consistent segment velocities across variable terrain.
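One way a cone-based, buffered FOV penalty like the one described above could look is sketched below. The functional form (a squared product of range and angle terms), the function name `fov_penalty`, and every default value are assumptions for illustration, not the project's actual cost function.

```python
import math


def fov_penalty(point, enemy_pos, enemy_heading, half_angle, view_range,
                angle_buffer=0.1, range_buffer=0.5, weight=10.0):
    """Nonlinear cost for a point relative to an enemy's view cone.

    Returns 0.0 outside the buffered cone. Inside it, the cost rises
    nonlinearly as the point moves closer to the enemy and to the cone axis.
    Angles are in radians; all parameter names here are hypothetical.
    """
    dx = point[0] - enemy_pos[0]
    dy = point[1] - enemy_pos[1]
    dist = math.hypot(dx, dy)

    max_range = view_range + range_buffer   # range inflated by a safety buffer
    max_angle = half_angle + angle_buffer   # half-angle inflated the same way

    if dist > max_range:
        return 0.0
    if dist == 0.0:
        return weight  # standing on the enemy: maximum penalty

    # Angular offset between the cone axis and the bearing to the point,
    # wrapped into [0, pi].
    bearing = math.atan2(dy, dx)
    offset = abs((bearing - enemy_heading + math.pi) % (2 * math.pi) - math.pi)
    if offset > max_angle:
        return 0.0

    range_term = 1.0 - dist / max_range     # 1 at the enemy, 0 at the edge
    angle_term = 1.0 - offset / max_angle   # 1 on the axis, 0 at the edge
    return weight * (range_term * angle_term) ** 2
```

A term like this can be added to the edge cost in a PRM or grid search so that paths through the cone are discouraged rather than forbidden outright.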

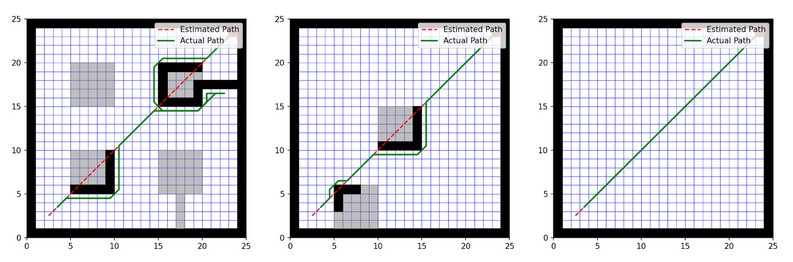

Implemented a real-time path planning system using D* Lite and A* search in Python, enabling dynamic replanning in response to environmental changes with sub-50 ms update times. Modeled the environment with a grid-based occupancy map and integrated enemy field-of-view constraints to ensure safe, collision-free navigation under uncertainty. Built an interactive visualization interface using Matplotlib, Pygame, and Tkinter to display robot movement, obstacle dynamics, and trajectory adjustments in real time.
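As a rough illustration of the A* half of this system, the sketch below searches a small occupancy grid with 4-connected moves and a Manhattan heuristic. The grid encoding (0 for free, 1 for occupied) and the function name `astar` are assumptions. Replanning here means simply rerunning the search after the map changes, whereas D* Lite repairs the previous solution incrementally.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (occupied) cells.

    start and goal are (row, col) tuples. Returns a list of cells from start
    to goal inclusive, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f, g, cell)
    g_cost = {start: 0}
    parent = {start: None}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found

        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None
```

On the grid `[[0, 0, 0], [1, 1, 0], [0, 0, 0]]`, `astar(grid, (0, 0), (2, 0))` routes around the wall through the right-hand column and returns 7 cells. Marking cell `(1, 2)` as occupied and calling `astar` again returns `None`, since the only gap is closed.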

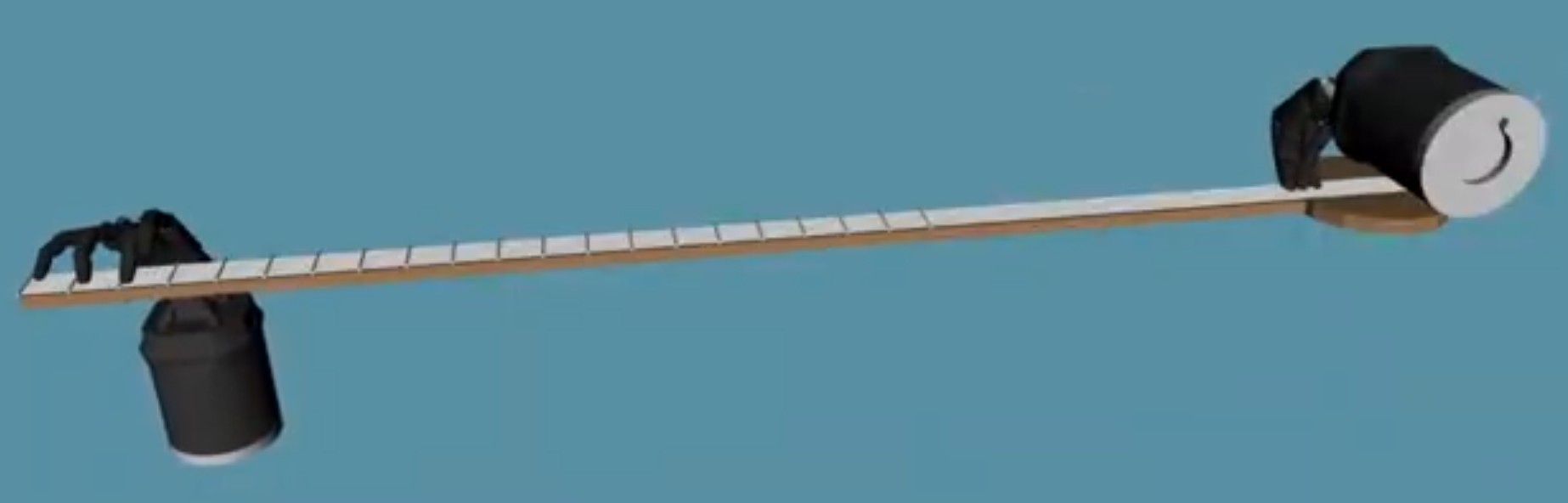

Engineered a 45-DOF bimanual robotic system using the Shadow Robot Hand URDF in ROS. Implemented damped least-squares inverse kinematics for task-prioritized motion planning and wrote algorithms for chord progression and strumming patterns within ROS.
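The damped least-squares update is dq = Jᵀ (J Jᵀ + λ² I)⁻¹ e, where J is the Jacobian, e the task-space error, and λ the damping factor. The sketch below applies it to a toy 2-link planar arm rather than the 45-DOF system. The link lengths, the damping value, and the function names are illustrative assumptions, and task prioritization is not shown.

```python
import math

L1, L2 = 1.0, 1.0  # hypothetical link lengths for a toy 2-link planar arm


def fk(q):
    """Forward kinematics: joint angles (q1, q2) -> end-effector (x, y)."""
    return (L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1]),
            L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1]))


def jacobian(q):
    """2x2 position Jacobian of the planar arm."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return ((-L1 * s1 - L2 * s12, -L2 * s12),
            (L1 * c1 + L2 * c12, L2 * c12))


def dls_step(q, target, damping=0.1):
    """One damped least-squares update: dq = J^T (J J^T + damping^2 I)^-1 e."""
    x, y = fk(q)
    ex, ey = target[0] - x, target[1] - y
    (a, b), (c, d) = jacobian(q)

    # A = J J^T + damping^2 I, a symmetric 2x2 matrix.
    a11 = a * a + b * b + damping ** 2
    a12 = a * c + b * d
    a22 = c * c + d * d + damping ** 2
    det = a11 * a22 - a12 * a12  # strictly positive whenever damping > 0

    # w = A^-1 e, using the closed-form 2x2 inverse.
    w1 = (a22 * ex - a12 * ey) / det
    w2 = (-a12 * ex + a11 * ey) / det

    # dq = J^T w
    return (q[0] + a * w1 + c * w2, q[1] + b * w1 + d * w2)


def solve_ik(q0, target, iters=200, tol=1e-9):
    """Iterate DLS steps until the position error drops below tol."""
    q = tuple(q0)
    for _ in range(iters):
        x, y = fk(q)
        if math.hypot(target[0] - x, target[1] - y) < tol:
            break
        q = dls_step(q, target)
    return q
```

The damping term keeps the matrix invertible near singular configurations such as a fully stretched arm, at the cost of slightly slower convergence. For example, `solve_ik((0.2, 1.2), (1.0, 1.0))` converges to joint angles whose forward kinematics land on the target.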

Built a dual-mode vision system (PyTorch CNN eye detection + adaptive HSV object tracking) with >85% accuracy, implemented quartic spline motion smoothing, and designed an optimized 3D-printed pan/tilt platform.